Using quantspace

using-quantspace.RmdThe quantspace library implements a flexible approach to quantile estimation which guarentees that quantiles do not cross at any point in the data. This will walk through the basic usage of quantspace, how to change algorithms, use different standard error methods, handle plotting, prediction, and interpreting coefficients.

To start, we load the library:

library(quantspace)

#> Loaded quantspace v0.1, using 3 cores for bootstrap sampling (see ?getCores).

#> Bug reports: github.com/be-green/quantspace/issues

set.seed(1999)To get a sense of how quantspace works, I’ll use some simulated data.

# Design Matrix

X <- matrix(rnorm(10000), ncol = 2)

betas <- c(-1, 2)

# make the scale also depend on X

scale_betas <- c(0.25, 1.5)

err <- exp(X %*% scale_betas) * rnorm(nrow(X))

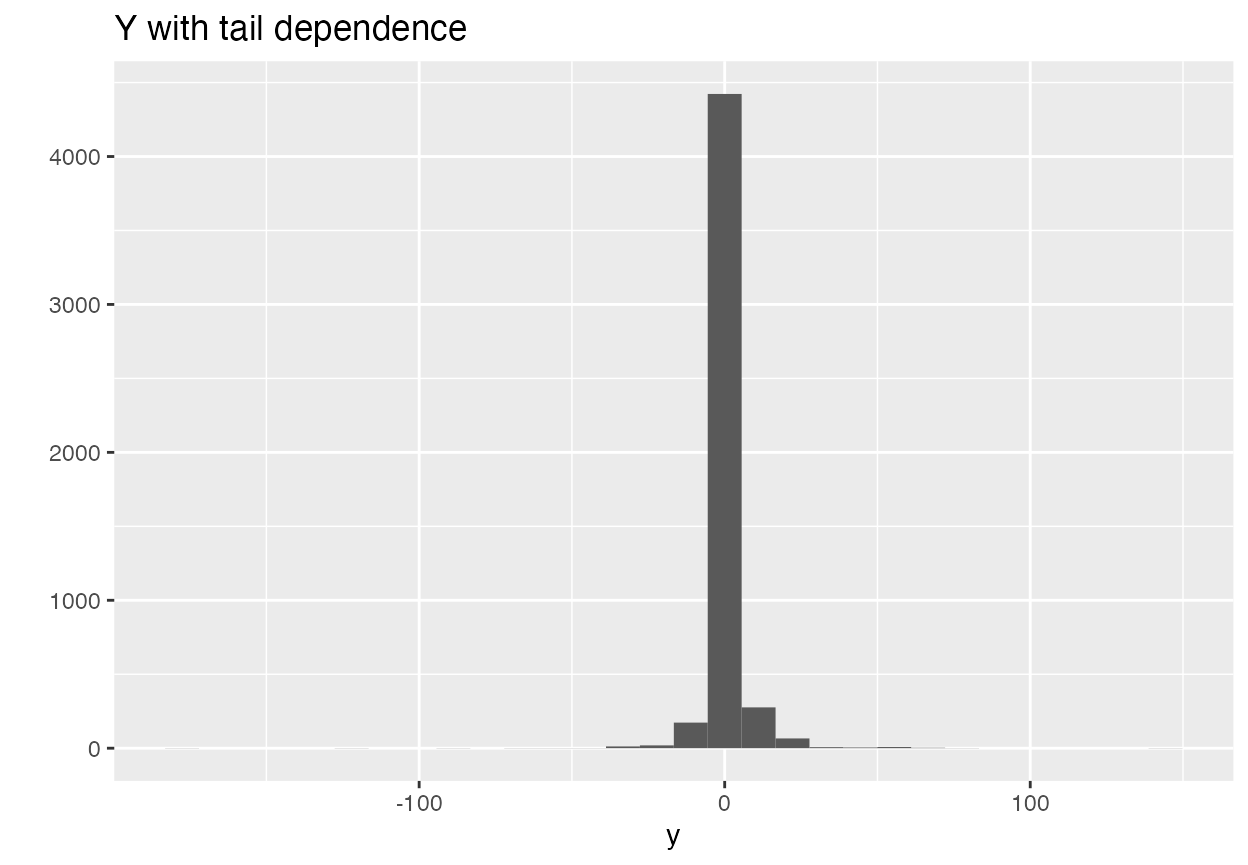

y <- X %*% betas + errThis gives us some fatter tails and some dependence in the tails. Let’s look at y in order to get a sense.

library(ggplot2)

# what does the tail dependence do relative to normal errors

y_no_err_dep <- X %*% betas + rnorm(nrow(X))

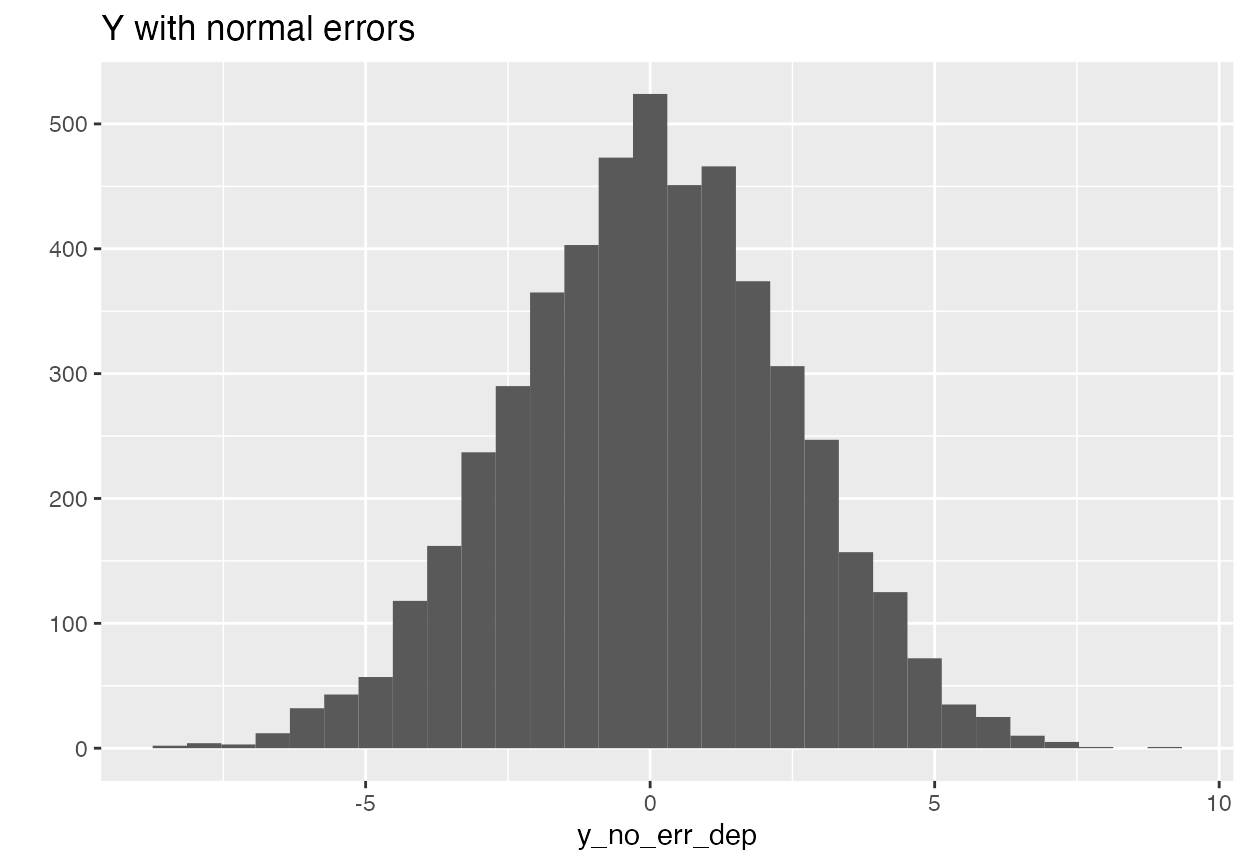

qplot(y_no_err_dep) + ggtitle("Y with normal errors")

qplot(y) + ggtitle("Y with tail dependence")

#> `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

The qs function

Basic use

Let’s try to get at that info! The main function which estimates models via quantile spacings is the qs function. Let’s check out an example:

regression_data <- data.frame(y = y, X)

# fits a quantile spacings model

# these quantiles are set as the default argument, and you

# can change them as you need to

fit <- qs(y ~ X1 + X2, data = regression_data,

quantiles = c(0.1, 0.25, 0.5, 0.75,0.9),

baseline_quantile = 0.5,

algorithm = "br")

summary(fit)

#> Baseline Quantile Coefficients:

#> Variable Quantile Coefficient SE

#> 1 (Intercept) 0.5 0.01095 0.022040

#> 2 X1 0.5 -0.99270 0.006513

#> 3 X2 0.5 2.00300 0.013130

#>

#> Quantile Spacing Coefficients:

#> Variable Quantile Coefficient Standard.Error

#> 1 (Intercept) 0.10 -0.5113 0.03202

#> 2 X1 0.10 0.2710 0.03509

#> 3 X2 0.10 1.4390 0.03556

#> 4 (Intercept) 0.25 -0.4445 0.02076

#> 5 X1 0.25 0.2789 0.02238

#> 6 X2 0.25 1.4690 0.02513

#> 7 (Intercept) 0.75 -0.4058 0.01942

#> 8 X1 0.75 0.2405 0.01988

#> 9 X2 0.75 1.5070 0.02277

#> 10 (Intercept) 0.90 -0.4606 0.02945

#> 11 X1 0.90 0.2493 0.03245

#> 12 X2 0.90 1.4770 0.03613

#>

#> Quantile Psuedo-R2:

#> 0.1: 0.9158

#> 0.25: 0.8239

#> 0.5: 0.2872

#> 0.75: 0.8258

#> 0.9: 0.9162We’ve already made a couple choices here. First, we set the regression formula, second we specified the data. The ones that are a bit stranger are the quantiles and baseline_quantile arguments. Because the quantile spacings model is a joint model, we need to estimate everything recursively.

To get the 0.25 spacing coefficient, we take the predicted median value, \(\hat{y}_{med}\), and calculate \(r = y - \hat{y}_{med}\). For the positive residuals, we then take log(r) as the outcome variable, and estimate a median regression. For negative residuals, we do the same thing but with log(-r). This guarentees that the quantiles fitted by the model don’t ever cross.

The baseline_quantile is the quantile that we take spacings relative to. We don’t recommend changing this away from the median, which is the default. The quantiles argument is simply the complete list of quantile effects you want to estimate.

The algorithm argument is the algorithm you want to use in order to fit the quantile regression at each stage. This will be covered in more detail later, but the options include all the algorithms implemented in the quantreg package (e.g. “br”, “pfn”, “sfn”) as well as “agd”, which implements accelerated gradient descent over a semi-smooth approximation of the quantile loss function, and “lasso” and “post-lasso”, which are penalized variants of the other algorithms. There will be more to come in the future, and there is an interface for adding your own algorithm which will be documented in a separate vignette. By default, this is “sfn”, which is the fastest method available in the quantreg package for most problems. For large-scale problems, you may see speed gains from using the “agd” algorithm.

Note (June 7, 2021): the interface to the penalized regression variants is likely to change in the near future. Treat as experimental.

There are also s3 methods common to other packages available for basic operations. These include coef and coefficients, which return a matrix of coefficients, predict which returns fitted quantiles (or out of sample predictions via the argument newdata), summary which summarizes the fitted model, and resid and residuals which return model residuals.

# get coefficients

betas <- coef(fit)

# get residuals

res <- resid(fit)

# in sample predictions

pred <- predict(fit)

# out of sample predictions

oos <- data.frame(matrix(rnorm(200), ncol = 2))

pred_oos <- predict(fit, newdata = oos)The qs function also has some control parameters you can see described in more detail on the help page for the function (?qs), including parallel, which determines whether bootstrap draws should be parallelized, calc_se which determines whether to calculate standard errors, and weights, which allows you run a weighted regression.

Advanced Usage

The more sophisticated controls for the quantile spacings model and standard errors are specified via the control and std_err_control arguments. Each of these is specified via calls to the qs_control() and se_control() functions. For details, you can always check the help pages (e.g. ?qs_control).

The qs_control function allows you to set small, the level at which values are treated as zero, trunc, whether to truncate residuals below the value of small, lambda, an optional penalty parameter for use with the lasso algorithm, output_quantiles, which controls whether to save fitted quantiles, and calc_avg_me, which determines whether to calculate average marginal effects. Perhaps with the exception of lambda, none of these need to be changed very often (if at all).

The se_control function allows the user to specify various aspects of the bootstrapping and subsampling used to calculate standard errors. By default, standard errors are calculated via subsampling, with 100 draws. There are several available algorithms for computing standard errors, all based on empirical reweighting and bootstrapping. Setting se_control(se_method = "weighted_bootstrap") will randomly re-weight data based on random exponential weights drawn with rate parameter 1. Using "subsample", which is the default, will construct subsamples of the data (without replacement. The size of these subsamples is set via subsample_percent. Using "bootstrap" will use data sample with replacement the same size as the original sample. To truly customize this, you can set this argument to "rq_sample".

The other se_control parameters allow you to customize the subsampling percent, the number of bootstraps (num_bs), whether to use random exponential weights (draw_weights), whether to sample with or without replacement or to leave the sample alone (sampling_method), and the size of the data at which point the bootstrapping/subsamplign will be done in parallel (parallel_thresh).

In the future, the package may add closed-form standard errors via the delta method, but as of now all standard error methods are based on bootstrapping and subsampling.

Distributional Modeling

The other thing that the quantspace package is very useful for is modeling the full distribution of outcomes. To do this, it contains routines which efficiently interpolate the fitted quantiles via cubic splines. Modeling the log distance away from the median, rather than treating each quantile independently, prevents the fitted quantiles from crossing, which means that we can always recover a valid density by interpolating between them.

Right now this is done with cubic splines, which can (very occasionally) go negative, which would not imply a valid CDF. If that happens, it’s probably a sign that this is a very poor model for your problem or that you have data which is quite small.

So how do we do this? Let’s take our fit from above and interpolate it. Because our model is non-linear, the distance between quantiles will vary with the data. By default, the distributional_effects function performs this interpolation at the average level of the data, returning the pdf, cdf, quantile function, and a random number generator for the fitted density.

d <- distributional_effects(fit)

list(

# evaluate the density at 0

pdf = d$pdf(0),

# evaluate the cdf at 0

cdf = d$cdf(0),

# return the fitted median

quantile = d$q(0.5),

# generate 10 random numbers from fitted density

d$r(10)

)

#> $pdf

#> [1] 0.1397495

#>

#> $cdf

#> [1] 0.4990706

#>

#> $quantile

#> [1] 0.006652388

#>

#> [[4]]

#> [1] -0.2663059 1.3667500 -1.4818322 5.2845205 1.8126936 -3.5019245

#> [7] 1.1443059 -2.6127307 -1.4457594 -0.1550923We can also do this for various levels of the data. Let’s see how these change as we move up in the first dimension of our X variable.

X_levels <- data.frame(quantiles = c(0, 0.25, 0.5, 0.75, 1),

X1 = mean(X[1,]),

X2 = quantile(X[2,]))

# interpolate fitted quantiles at all levels of X1

vary_x2 <- distributional_effects(fit, newdata = X_levels, tails = "gaussian")

plot(vary_x2, tail_level = 0.001)